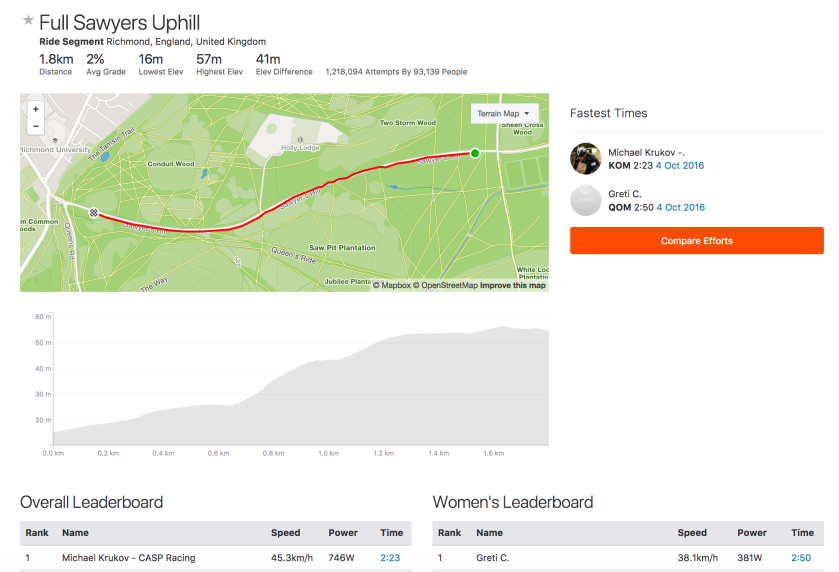

I guess that most male cyclists don’t pay much attention to the women’s leaderboards on Strava. And if they do it might just be to make some puerile remark about boys being better than girls. From a scientific perspective the comparison of male and female times leads to some interesting analysis.

Assuming both men and women have read my previous blogs on choosing the best time, weather conditions and wind directions for the segment that suits their particular strengths, we come back to basic physics.

KOM or QOM time = Work done / Power = (Work against gravity + Drag x Distance + Rolling resistance x Distance) / (Mass x Watt/kg)

Of the three components of work done, rolling resistance tends to be relatively insignificant. On a very steep hill, most of the work is done against gravity, whereas on a flat course, aerodynamic drag dominates.

The two key factors that vary between men and women are mass and power to weight ratio (watts per kilo). A survey published by the ONS in 2010, rather shockingly reported that the average British man weighed 83.6kg, with women coming in at 70.2kg. This gives a male/female ratio of 1.19. KOM/QOM cyclists would tend to be lighter than this, but if we take 72kg and 60kg, the ratio is still 1.20.

Males generate more watts per kilogram due to having a higher proportion of lean muscle mass. Although power depends on many factors, including lungs, heart and efficiency of circulation, we can estimate the relative power to weight ratio by comparing the typical body composition of males and females. Feeding the ONS statistics into the Boer formula gives a lean body mass of 74% for men and 65% for women, resulting in a ratio of 1.13. This can be compared against the the useful table on Training Peaks showing maximal power output in Watts/kg, for men and women, over different time periods and a range of athletic abilities. The table is based on the rows showing world record performances and average untrained efforts. For world champion five minute efforts and functional threshold powers, the ratios are consistent with the lean mass ratio. It makes sense that the ratio should be higher for shorter efforts, where the male champions are likely to be highly muscular. Apparently the relative performance is precisely 1.21 for all durations in untrained people.

On a steep climb, where the work done against gravity dominates, the benefit of additional male muscle mass is cancelled by the fact that this mass must be lifted, so the difference in time between the KOM and the QOM is primarily due to relative power to weight ratio. However, being smaller, women suffer from the disadvantage that the inert mass of bike represents a larger proportion of the total mass that must be raised against gravity. This effect increases with gradient. Accounting for a time difference of up to 16% on the steepest of hills.

In contrast, on a flat segment, it comes down to raw power output, so men benefit from advantages in both mass and power to weight ratio. But power relates to the cube of the velocity, so the elapsed time scales inversely with the cube root of power. Furthermore, with smaller frames, women present a lower frontal area, providing a small additional advantage. So men can be expected to have a smaller time advantage of around 9%. In theory the advantage should continue to narrow as the gradient shifts downhill.

Theory versus practice

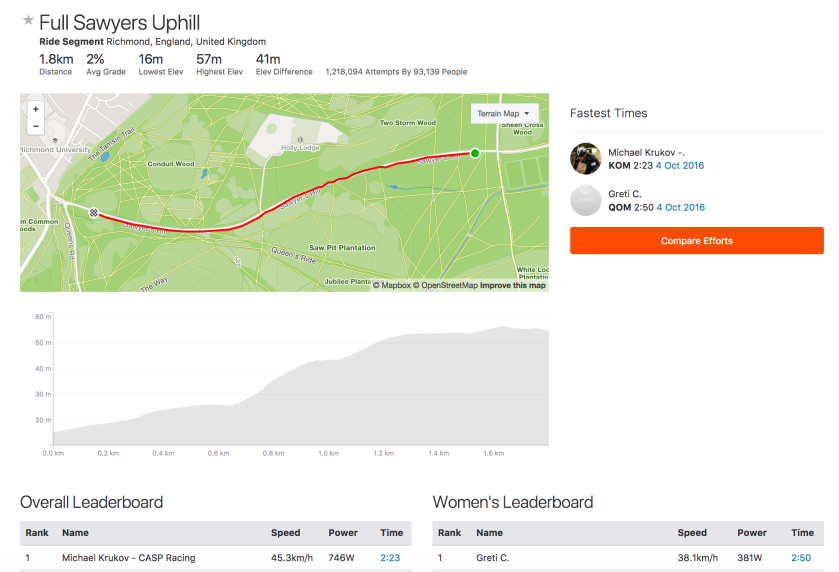

Strava publishes the KOM and QOM leaderboards for all segments, so it was relatively straightforward to check the basic model against a random selection of 1,000 segments across the UK. All leaderboards included at least 1,666 riders, with an overall average of 637 women and 5,030 men. One of the problems with the leaderboards is that they can be contaminated by spurious data, including unrealistic speeds or times set by groups riding together. To combat this, the average was taken of the top five times set on different dates, rather than simply to top KOM or QOM time.

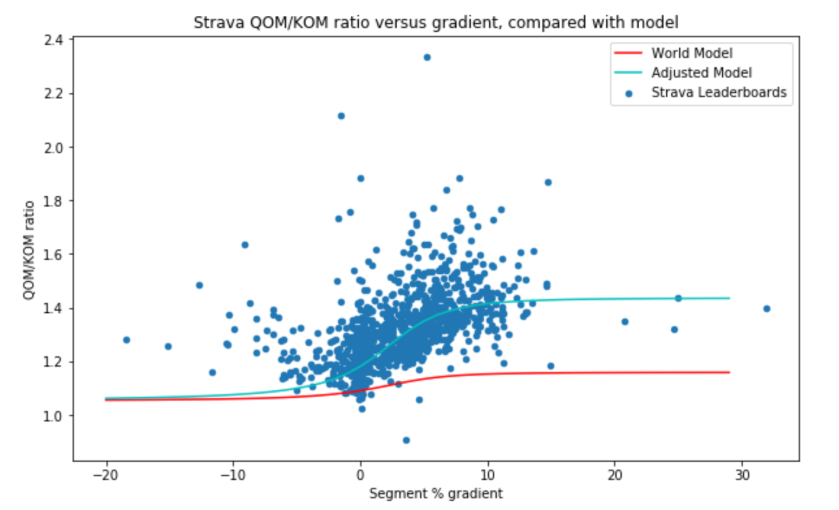

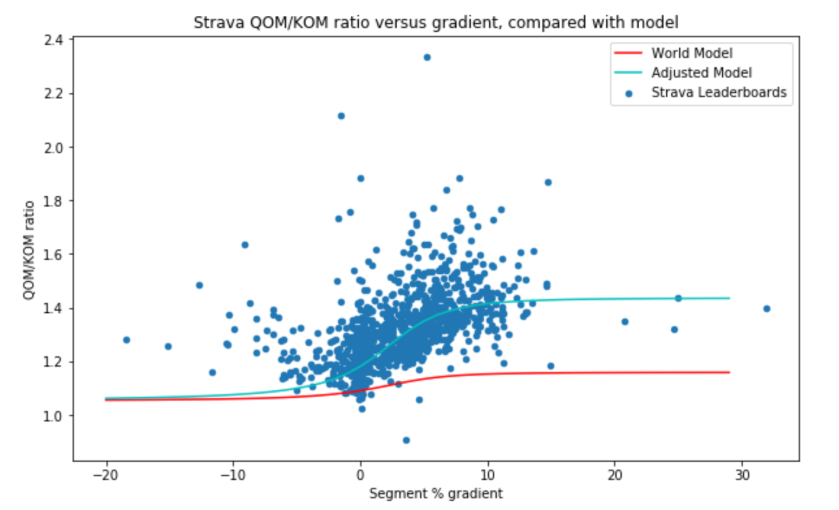

The average segment length was just under 2km, up a gradient of 3%. The following chart plots the ratio of the QOM time to the KOM time versus gradient compared with the model described above. The red line is based on the lean body mass/world record holders estimate of 1.13, whereas the average QOM/KOM ratio was 1.32. Although there is a perceivable upward slope in the data for positive gradients, clearly this does not fit the data.

Firstly, the points on the left hand side indicate that men go downhill much more fearlessly than women, suggesting a psychological explanation for the observations deviating from the model. To make the model fit better for positive gradients, there is no obvious reason to expect the weight ratio of male to female Strava riders to deviate from the general population, so this leaves only the relative power to weight ratio. According to the model the QOM/KOM ratio should level off to the power to weight ratio for steep gradients. This seems to occur for a value of around 1.40, which is much higher than the previous estimates of 1.13 or the 1.21 for untrained people. How can we explain this?

A notable feature of the data set was that sample of 1,000 Strava segments was completed by nearly eight times as many men as women. This, in turn reflects the facts that there are more male than female cyclists in the UK and that men are more likely to upload, analyse, publicise and gloat over their performances than women.

Having more men than women, inevitably means that the sample includes more high level male cyclists than equivalent female cyclists. So we are not comparing like with like. Referring back to the Training Peaks table of expected power to weight ratios, a figure of 1.40 suggests we are comparing women of a certain level against men of a higher category, for example, “very good” women against “excellent” men.

A further consequence of having far more men than women is that is much more likely that the fastest times were recorded in the ideal conditions described in my previous blogs listed earlier.

Conclusions

There is room for more women to enjoy cycling and this will push up the standard of performance of the average amateur rider. This would enhance the sport in the same way that the industry has benefited as more women have joined the workforce.

In the

In the

Many commentators have been licking their lips at the prospect of head-to-head combat between Chris Froome and Tom Dumoulin at next year’s Tour de France. It is hard to make a comparison based on their results in 2017, because they managed to avoid racing each other over the entire season of UCI World Tour races, meeting only in the World Championship Individual Time Trial, where the Dutchman was victorious. But it is intriguing to ask how Dumoulin might have done in the Tour de France and the Vuelta or, indeed, how Froome might have fared in the Giro.

Many commentators have been licking their lips at the prospect of head-to-head combat between Chris Froome and Tom Dumoulin at next year’s Tour de France. It is hard to make a comparison based on their results in 2017, because they managed to avoid racing each other over the entire season of UCI World Tour races, meeting only in the World Championship Individual Time Trial, where the Dutchman was victorious. But it is intriguing to ask how Dumoulin might have done in the Tour de France and the Vuelta or, indeed, how Froome might have fared in the Giro.