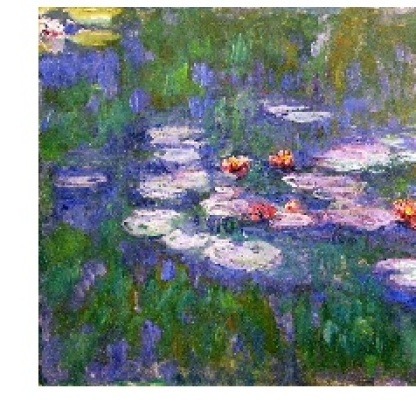

fast.ai’s latest version of Practical Deep Learning for Coders Part 2 kicks off with a review of Stable Diffusion. This is a deep neural network architecture developed by Stability AI that is able to convert text into images. With a bit of tweaking it can do all sorts of other things. Inspired by the amazing videos created by Softology, I set out to generate a dreamlike video based on the idea of riding my bicycle around a stage of the Giro d’Italia.

Text to image

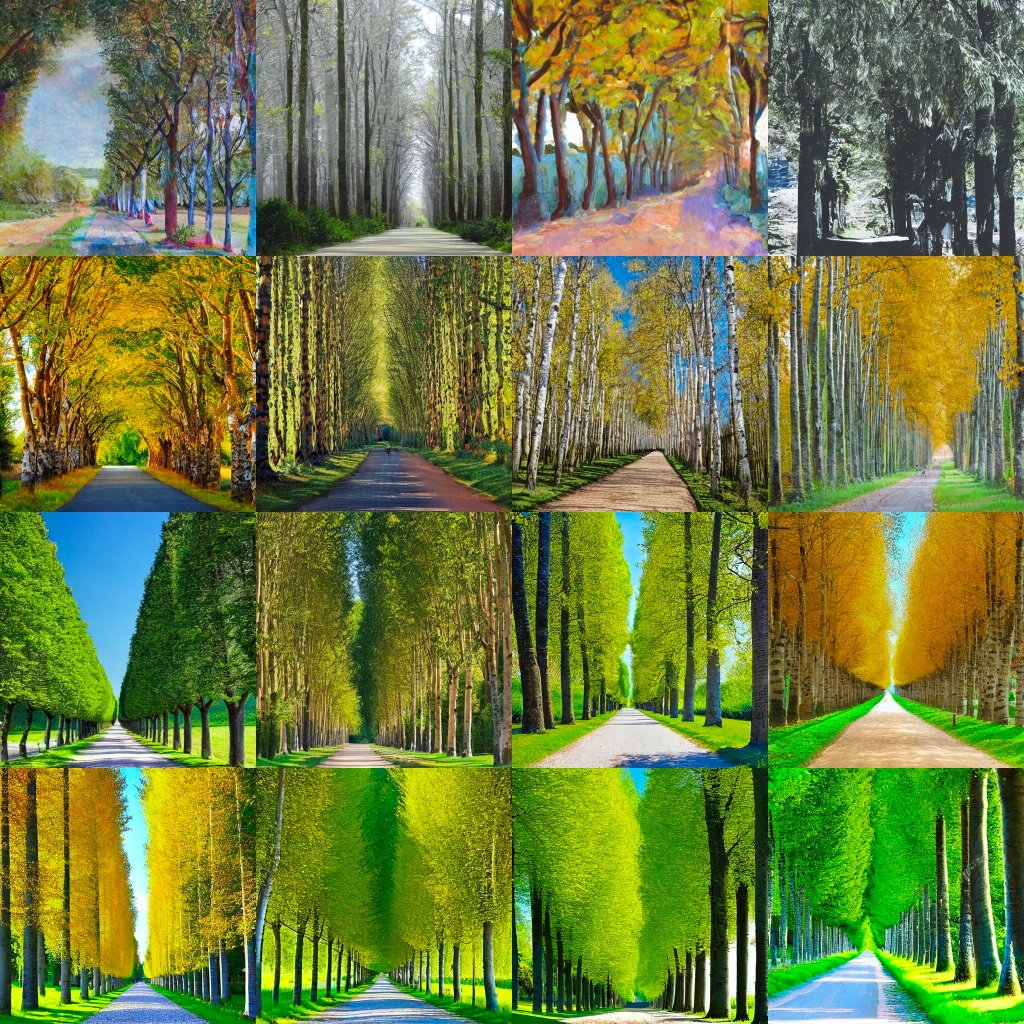

As mentioned in a previous post, Hugging Face is a fantastic resource for open source models. I worked with one of fast.ai’s notebooks using a free GPU on Google Colab. In the first step I set up a text-to-image pipeline using a pre-trained version of stable-diffusion-v1-4. The prompt “a treelined avenue, poplars, summer day, france” generated the following images, where the model was more strongly guided by the prompt in each successive row. I liked the first image in the second row, so I decided to make this the first frame in an initial test video.

Stable Diffusion is trained in a multimodal fashion, by aligning text embeddings with the encoded versions of the corresponding images. Starting with random noise, the image is progressively modified in order to move the encoding of the noisy image closer to something that matches the embedding of the text prompt.

Zooming in

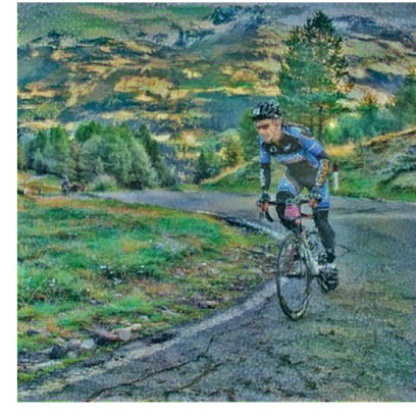

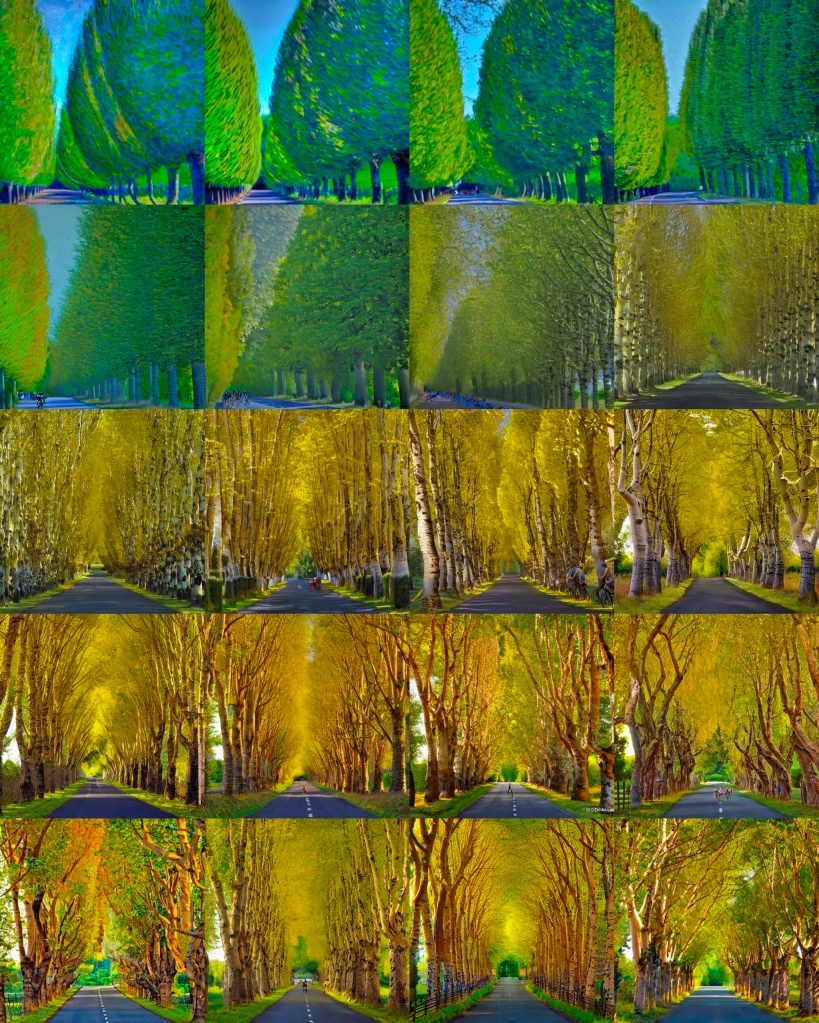

The next step was to simulate the idea of moving forward along the road. I did this by writing a simple two-line function, using fast.ai tools, that cropped a small border off the edge of the image and then scaled it back up to the original size. In order to generate my movie, rather than starting with random noise, I wanted to use my zoomed-in image as the starting point for generating the next image. For this I needed to load up an image-to-image pipeline.
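The crop-and-rescale step can be sketched as follows. This is only an illustration: it uses Pillow instead of the fast.ai tools mentioned above, and the function name `zoom_in`, the `border` parameter and the bicubic resampling are assumptions rather than details from the original notebook.

```python
from PIL import Image


def zoom_in(img: Image.Image, border: int = 2) -> Image.Image:
    """Crop `border` pixels off every edge, then scale back to the original size."""
    w, h = img.size
    if border <= 0 or 2 * border >= min(w, h):
        raise ValueError("border must be positive and leave part of the image")
    cropped = img.crop((border, border, w - border, h - border))
    # Resizing back to (w, h) keeps every frame the same size for the pipeline.
    return cropped.resize((w, h), Image.BICUBIC)


# A plain test image stands in for a generated frame.
frame = Image.new("RGB", (512, 512), "green")
zoomed = zoom_in(frame, border=2)
```

Because the output has the same dimensions as the input, the zoomed image can be passed straight back in as the next starting image.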

I spent about an hour experimenting with four parameters. Zooming in by trimming only a couple of pixels around the edge created smoother transitions. Reducing the strength of additional noise enhanced the sense of continuity by ensuring that subsequent images did not change too dramatically. A guidance scale of 7 forced the model to keep following the prompt and not simply zoom into the middle of the image. The number of inference steps provided a trade-off between image quality and run time.
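The overall loop might look something like the sketch below. To keep it runnable without a GPU, the image-to-image call is passed in as a function; `generate_frames`, `step` and `placeholder_step` are hypothetical names, and the placeholder simply copies its input. In a real run, `step` would call the image-to-image pipeline with the prompt, the noise strength, the guidance scale (7 in this post) and the number of inference steps.

```python
from typing import Callable, List

from PIL import Image

# Any function that turns a starting image into the next generated frame.
Step = Callable[[Image.Image], Image.Image]


def generate_frames(first: Image.Image, step: Step, n_frames: int,
                    border: int = 2) -> List[Image.Image]:
    """Build a sequence where each frame starts from a zoomed copy of the last."""
    w, h = first.size
    frames = [first]
    while len(frames) < n_frames:
        prev = frames[-1]
        # Trim a thin border and scale back up; a small border gives
        # smoother transitions between consecutive frames.
        start = prev.crop((border, border, w - border, h - border))
        start = start.resize((w, h), Image.BICUBIC)
        frames.append(step(start))
    return frames


def placeholder_step(img: Image.Image) -> Image.Image:
    # Stand-in for the image-to-image pipeline call (an assumption,
    # used only so that this sketch runs anywhere).
    return img.copy()


demo = generate_frames(Image.new("RGB", (64, 64), "gray"),
                       placeholder_step, n_frames=8)
```

Keeping the generation step as a separate function makes it easy to try different parameter settings without touching the loop itself.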

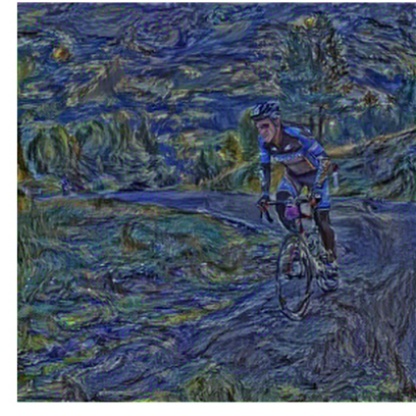

When I was happy, I generated a sequence of 256 images, which took about 20 minutes, and saved them as a GIF. This produced a pleasing, constantly changing effect with an impressionist style.
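Saving a list of frames as a looping GIF can be done with Pillow alone. The sketch below is an assumption about how this could be done, not the code from the notebook; the helper name `save_gif`, the 100 ms frame duration and the small coloured test frames are all placeholders.

```python
import os
import tempfile

from PIL import Image


def save_gif(frames, path, ms_per_frame=100):
    """Write a list of PIL images to an animated GIF that loops forever."""
    frames[0].save(
        path,
        save_all=True,              # write every frame, not just the first
        append_images=frames[1:],   # the remaining frames, in order
        duration=ms_per_frame,      # display time per frame in milliseconds
        loop=0,                     # 0 means repeat indefinitely
    )


# Four distinct solid-colour frames stand in for generated images.
frames = [Image.new("RGB", (64, 64), (i * 60, 100, 200 - i * 60)) for i in range(4)]
gif_path = os.path.join(tempfile.mkdtemp(), "demo.gif")
save_gif(frames, gif_path)
```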

Back to where you started

In order to make the GIF loop smoothly, it was desirable to find a way to return to the starting image as part of the continuous zooming in process. At first it seemed that this might be possible by reversing the existing sequence of images and then generating a new sequence of images using each image in the reversed list as the next starting point. However, this did not work, because it gave the impression of moving backwards, rather than progressing forward along the road.

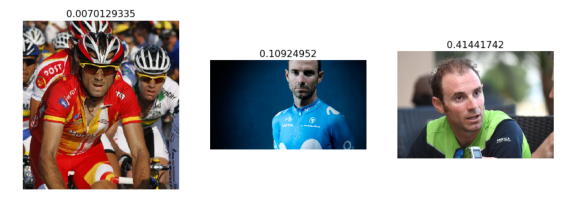

After thinking about the way Stable Diffusion works, it became apparent that I could return to the initial image by mixing it with the current image before taking the next step. By progressively increasing the mixing weight of the initial image, the generated images became closer to the target over a desired number of steps, as shown below.
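One way to express this mixing is a per-pixel weighted average whose weight grows with each step. The sketch below assumes a linear schedule that ends at a weight of 1.0; the actual schedule used for the video is not stated here, and `mix_weights` and `blend_towards` are hypothetical helper names.

```python
from PIL import Image


def mix_weights(n_steps: int) -> list:
    """Linearly increasing weights for the initial image, ending at 1.0."""
    return [(i + 1) / n_steps for i in range(n_steps)]


def blend_towards(current: Image.Image, target: Image.Image,
                  weight: float) -> Image.Image:
    """Return current * (1 - weight) + target * weight, pixel by pixel."""
    # Image.blend requires both images to share the same size and mode.
    return Image.blend(current, target, weight)


current = Image.new("RGB", (64, 64), (0, 0, 0))        # stand-in for the latest frame
initial = Image.new("RGB", (64, 64), (255, 255, 255))  # stand-in for the first frame

weights = mix_weights(4)
mixed = [blend_towards(current, initial, w) for w in weights]
```

In the full loop, each blended image would then be zoomed and passed through the image-to-image step, so even a weight close to 1.0 produces a newly generated frame rather than an exact copy of the first one.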

Putting it all together produced the following video, which successfully loops back to its starting point. It is not a perfect animation, because it zooms into the centre, whereas the vanishing point is below the centre of the image. This means we end up looking up at the trees at some points. But overall it had the effect I was after.

A stage of the Giro

Once all this was working, it was relatively straightforward to create a video that tells a story. I made a list of prompts describing the changing countryside of an imaginary stage of the Giro d’Italia, specifying the number of frames for each sequence. I chose the following.

['a wide street in a rural town in Tuscany, springtime', 25],

['a road in the countryside, in Tuscany, springtime', 25],

['a road by the sea, trees on the right, sunny day, Italy', 50],

['a road going up a mountain, Dolomites, sunny day', 50],

['a road descending a mountain, Dolomites, Italy', 25],

['a road in the countryside, cypress trees, Tuscany', 50],

['a narrow road through a medieval town in Tuscany, sunny day', 50]
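A list like this can be expanded into one prompt per frame, which also makes the total length of the video easy to check. The helper name `frame_prompts` is an assumption for illustration; only the prompts and frame counts come from the list above.

```python
prompts = [
    ["a wide street in a rural town in Tuscany, springtime", 25],
    ["a road in the countryside, in Tuscany, springtime", 25],
    ["a road by the sea, trees on the right, sunny day, Italy", 50],
    ["a road going up a mountain, Dolomites, sunny day", 50],
    ["a road descending a mountain, Dolomites, Italy", 25],
    ["a road in the countryside, cypress trees, Tuscany", 50],
    ["a narrow road through a medieval town in Tuscany, sunny day", 50],
]


def frame_prompts(schedule):
    """Repeat each prompt for its number of frames, preserving order."""
    return [prompt for prompt, n_frames in schedule for _ in range(n_frames)]


per_frame = frame_prompts(prompts)
print(len(per_frame))  # 275 frames in total
```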

These prompts produced the video shown at the top of this post. The springtime blossom in the starting town was very effective and the endless climb up into the sunlit Dolomites looked great. For some reason the seaside prompt did not work, so the sequence became temporarily stuck with red blobs. Running it again would produce something different. Changing the prompts offers endless possibilities.

The code to run this appears on my GitHub page. If you have a Google account, you can open it directly in Colab and set the runtime type to GPU. You also need a free Hugging Face account to load the Stable Diffusion pipelines.