In my last post I described how to generate a series of images by feeding back the output of a Stable Diffusion image-to-image model as the input for the next image. I have now developed this into a Generative AI pipeline that creates music videos from the lyrics of songs.

Learning to animate

In my earlier blog post about dreaming of the Giro, I saved a series of key frames as a GIF. This gave an attractive stream of images, but the transitions were rather clunky. The natural next step was to insert frames that smooth out the transitions between the key frames, and to save the result as an MP4 video at a rate of 20 frames per second.
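One simple way to insert smoothing frames is a linear cross-fade between neighbouring key frames. The sketch below assumes that approach, which may differ from the method used in the actual pipeline, and the function names are purely illustrative. Frames can be anything that supports scalar multiplication and addition, such as NumPy float arrays; plain floats stand in for single pixel values here.

```python
from typing import List


def interpolation_weights(n_between: int) -> List[float]:
    """Blend weights for n_between frames placed strictly between two key frames."""
    return [i / (n_between + 1) for i in range(1, n_between + 1)]


def smooth(key_frames: list, n_between: int) -> list:
    """Insert n_between linearly blended frames between consecutive key frames.

    Assumption: a plain cross-fade; the frames only need to support
    `scalar * frame` and `frame + frame`.
    """
    if not key_frames:
        return []
    out = [key_frames[0]]
    for a, b in zip(key_frames, key_frames[1:]):
        for t in interpolation_weights(n_between):
            out.append((1 - t) * a + t * b)  # weighted mix of the two key frames
        out.append(b)
    return out


# Two "frames" (single pixel values) with three frames inserted between them.
frames = smooth([0.0, 1.0], n_between=3)
print(frames)
```

At 20 frames per second, inserting 19 frames between each pair of key frames would show one key frame per second of video.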

I started experimenting with a series of prompts, combined with the styles of different artists. Salvador Dali worked particularly well for dreamy animations of story lines. In the Dali example below, I used “red, magenta, pink” as a negative prompt to stop these colours swamping the image. The Kandinsky and Miro animations became gradually more detailed. I think these effects were a consequence of the repetitive feedback involved in the pipeline. The Arcimboldo portraits go from fish to fruit to flowers.

Demo app

I created a demo app on Hugging Face called AnimateYourDream. To get this to work, you need to duplicate it and then run it using a GPU on your Hugging Face account (costing $0.06 per hour). The idea was to try to recreate a dream I’d had the previous night. You can choose the artistic style, select an option to zoom in, enter three guiding prompts with the desired number of frames and choose a negative prompt. The animation process takes 3-5 minutes on a basic GPU.

For example, setting the style as “Dali surrealist”, zooming in, with 5 frames each of “landscape”, “weird animals” and “a castle with weird animals” produced the following animation.

Music videos

After spending some hours generating animations on a free Google Colab GPU and marvelling over the animations, I found that the images were brought to life by the music I was playing in the background. This triggered the brainwave of using the lyrics of songs as prompts for the Stable Diffusion model.

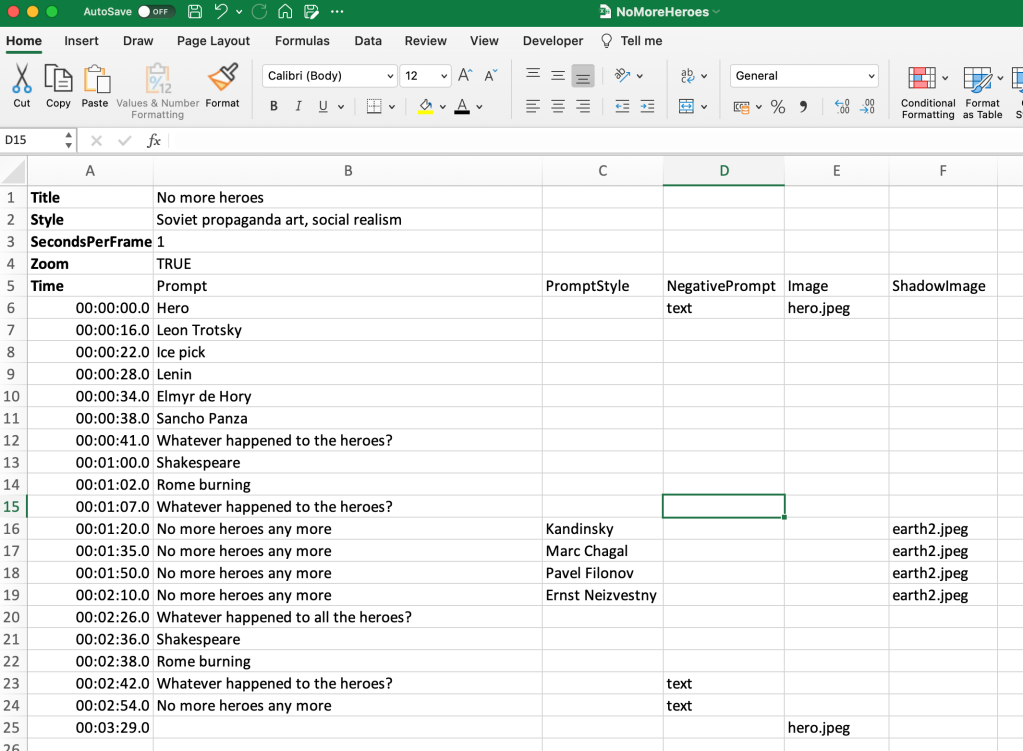

In order to produce an effective music video, I needed the images to change in time with the lyrics. Rather than messing around editing my Python code, I ended up using an Excel template spreadsheet as a convenient way to enter the lyrics alongside their times in the track. It was useful to enter “text” as a negative prompt, and it was sometimes helpful to mention a particular colour to stop it dominating the output. By default an overall style is added to each prompt, but it is convenient to change the style on certain prompts. By default the initial image is used as a “shadow”, which contributes 1% to every subsequent frame, in an attempt to retain an overall theme. This can also be overridden on each prompt.
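The per-prompt defaults and overrides can be pictured with a small sketch. Everything named here is hypothetical: the field names, the default style, and the way the style is appended to the lyric are assumptions for illustration, not the actual spreadsheet columns. The shadow is modelled as a simple weighted average, with floats standing in for image arrays.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_STYLE = "Dali surrealist"  # hypothetical overall style
DEFAULT_SHADOW = 0.01              # the initial image contributes 1% by default


@dataclass
class PromptRow:
    """One row of the template (field names are assumptions)."""
    time_s: float                   # time in the track when the lyric starts
    lyric: str                      # text used as the prompt
    style: Optional[str] = None     # None means "use the overall style"
    negative: str = "text"          # negative prompt
    shadow: Optional[float] = None  # None means "use the default 1%"


def build_prompt(row: PromptRow, default_style: str = DEFAULT_STYLE) -> str:
    """Append the row's style, or the overall style, to the lyric."""
    style = row.style if row.style is not None else default_style
    return f"{row.lyric}, {style}"


def apply_shadow(frame, initial, row: PromptRow):
    """Mix a small proportion of the initial image into the current frame."""
    w = DEFAULT_SHADOW if row.shadow is None else row.shadow
    return (1 - w) * frame + w * initial


row = PromptRow(time_s=12.5, lyric="no more heroes")
print(build_prompt(row))
```

Keeping the overrides optional means most rows only need a time and a lyric, while individual rows can still change the style or the shadow weight.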

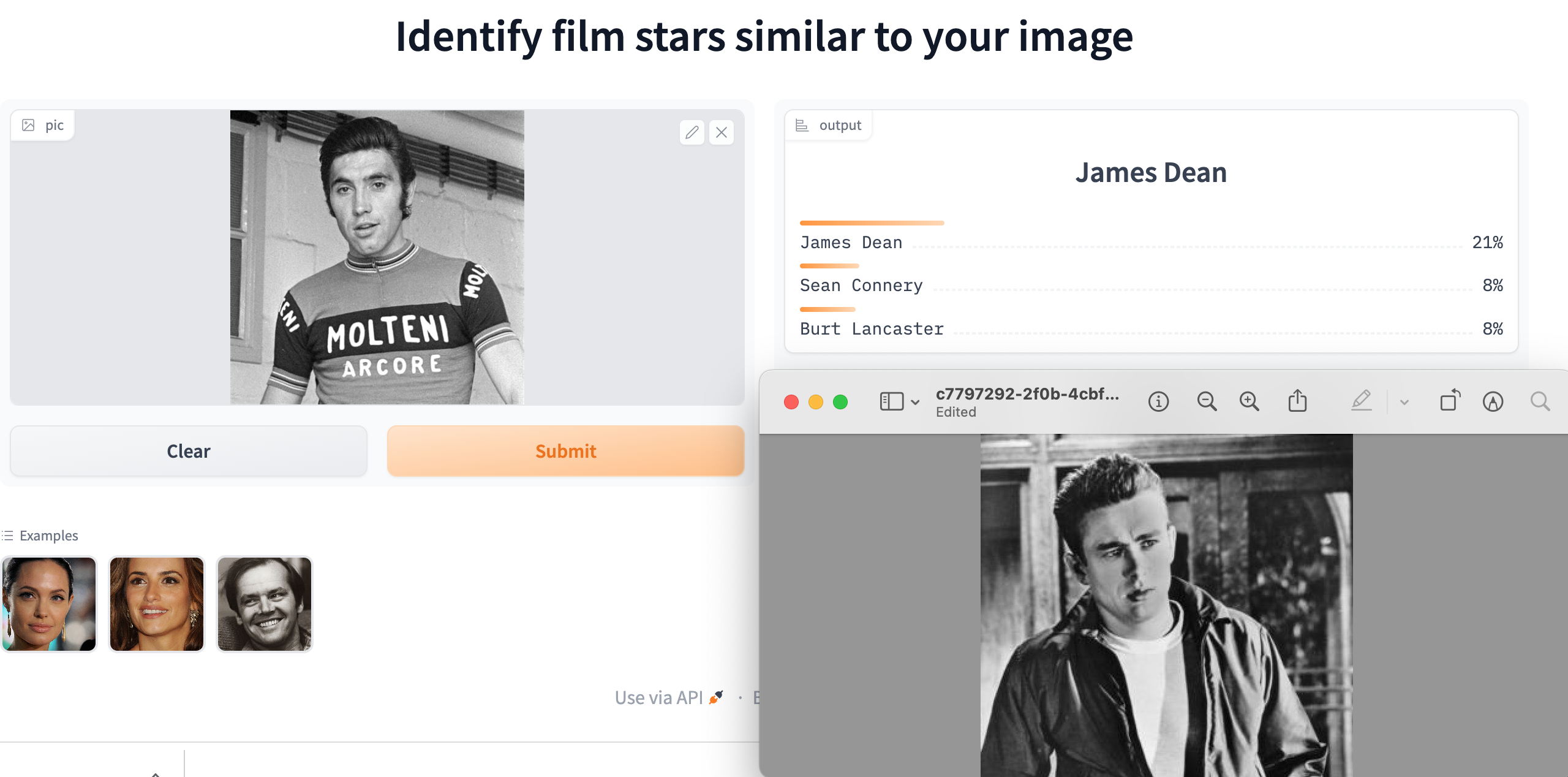

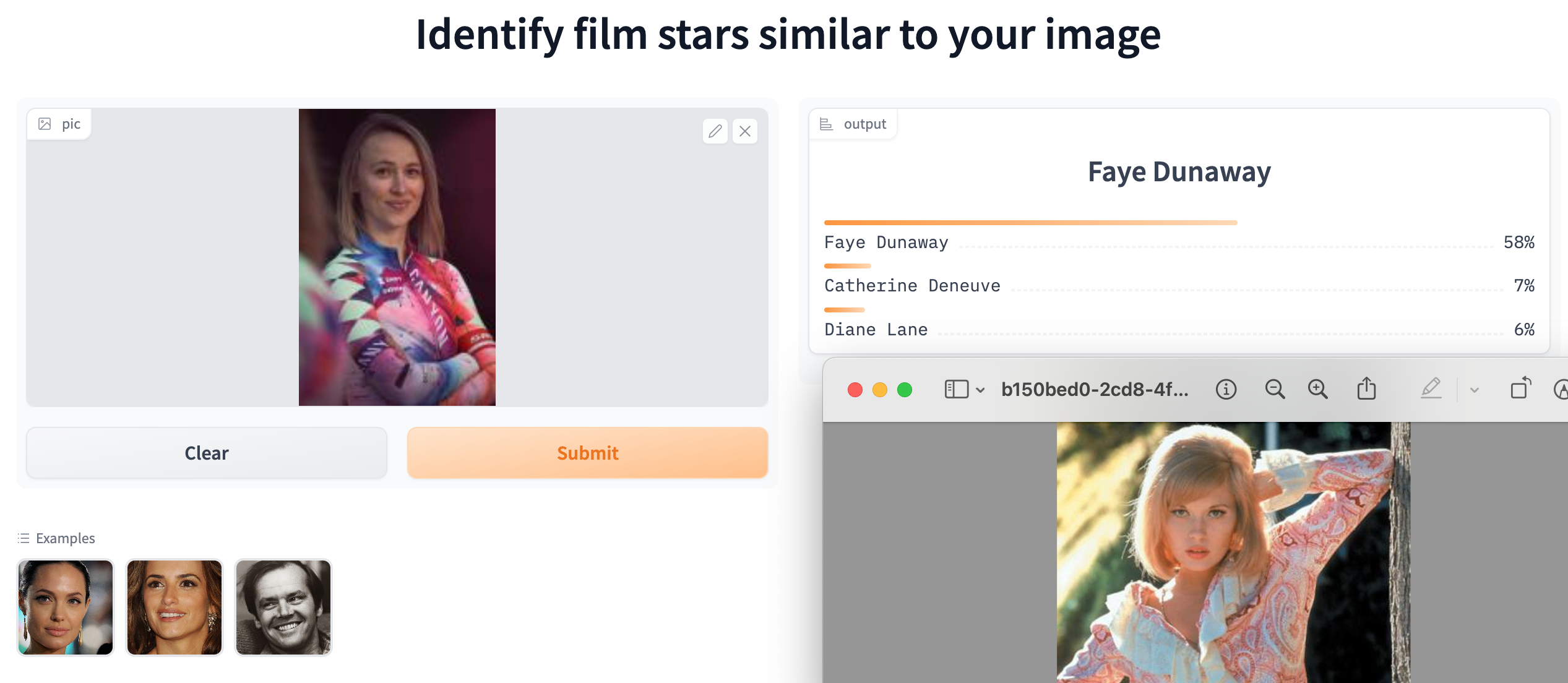

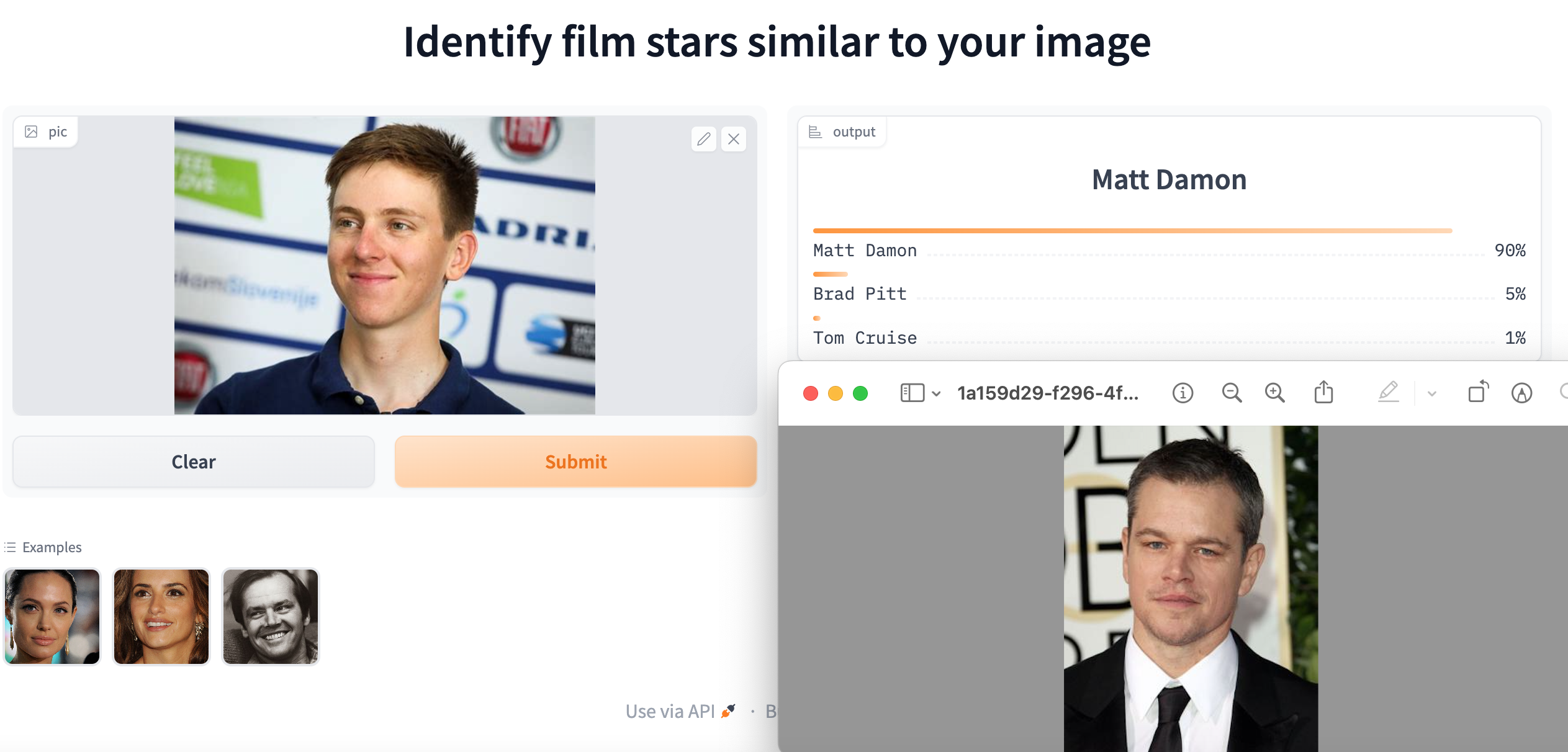

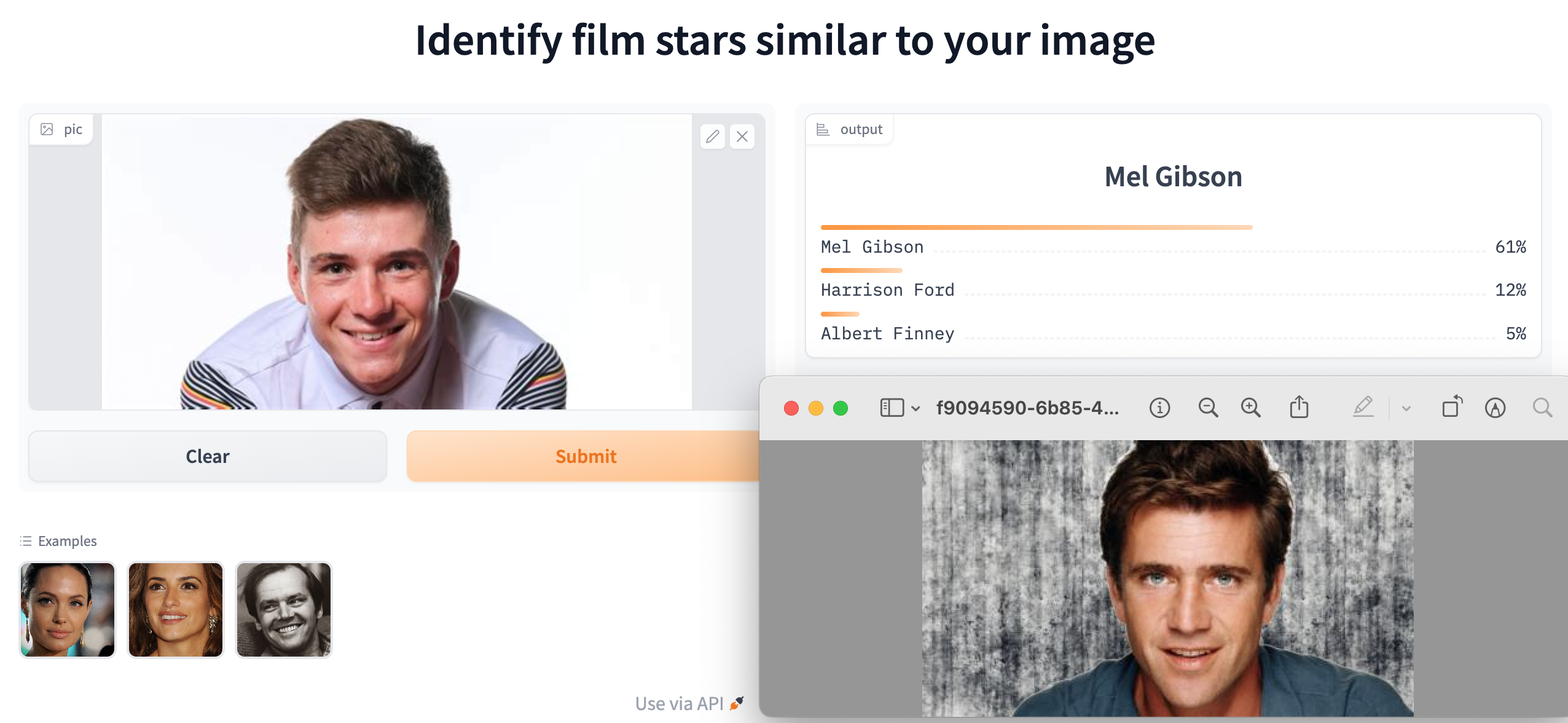

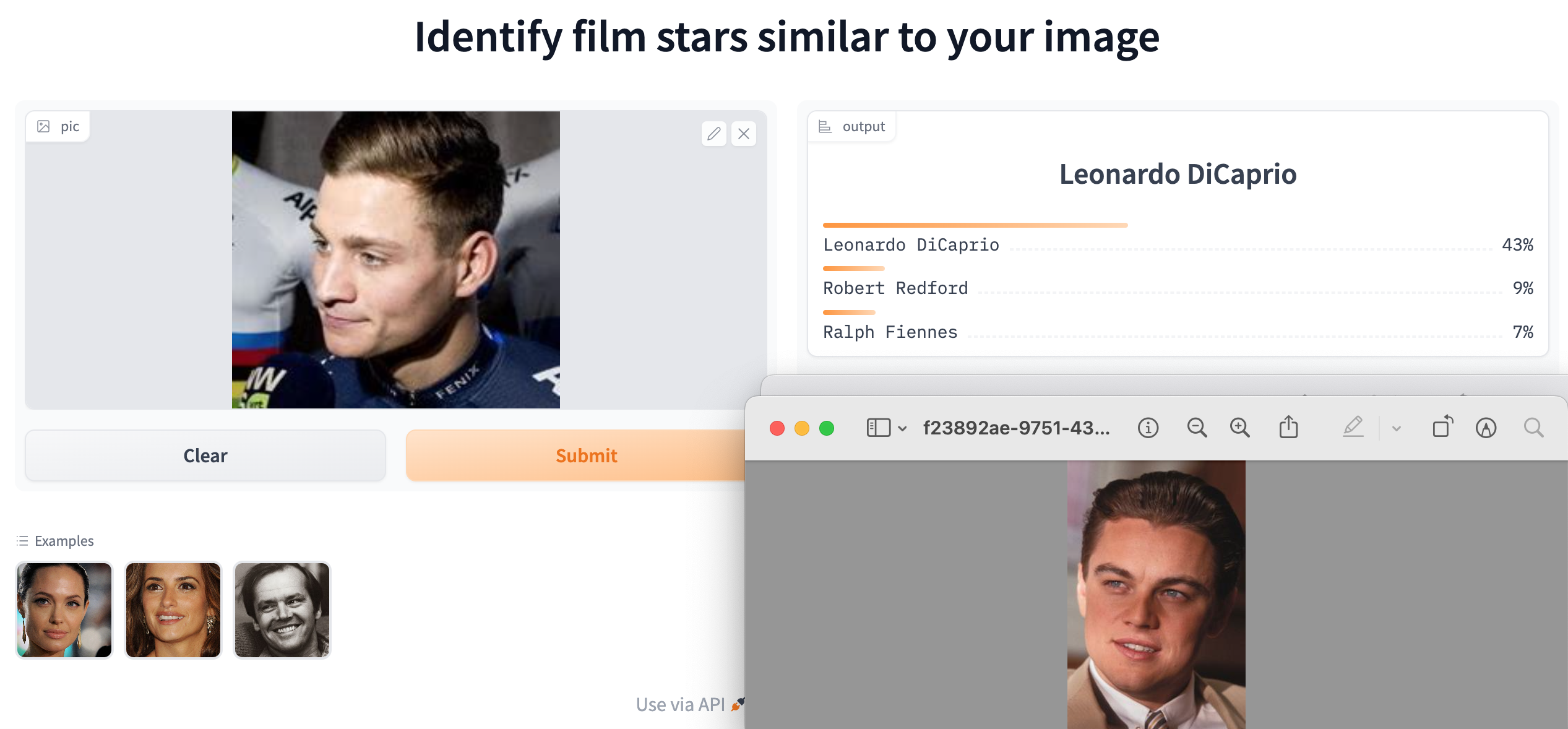

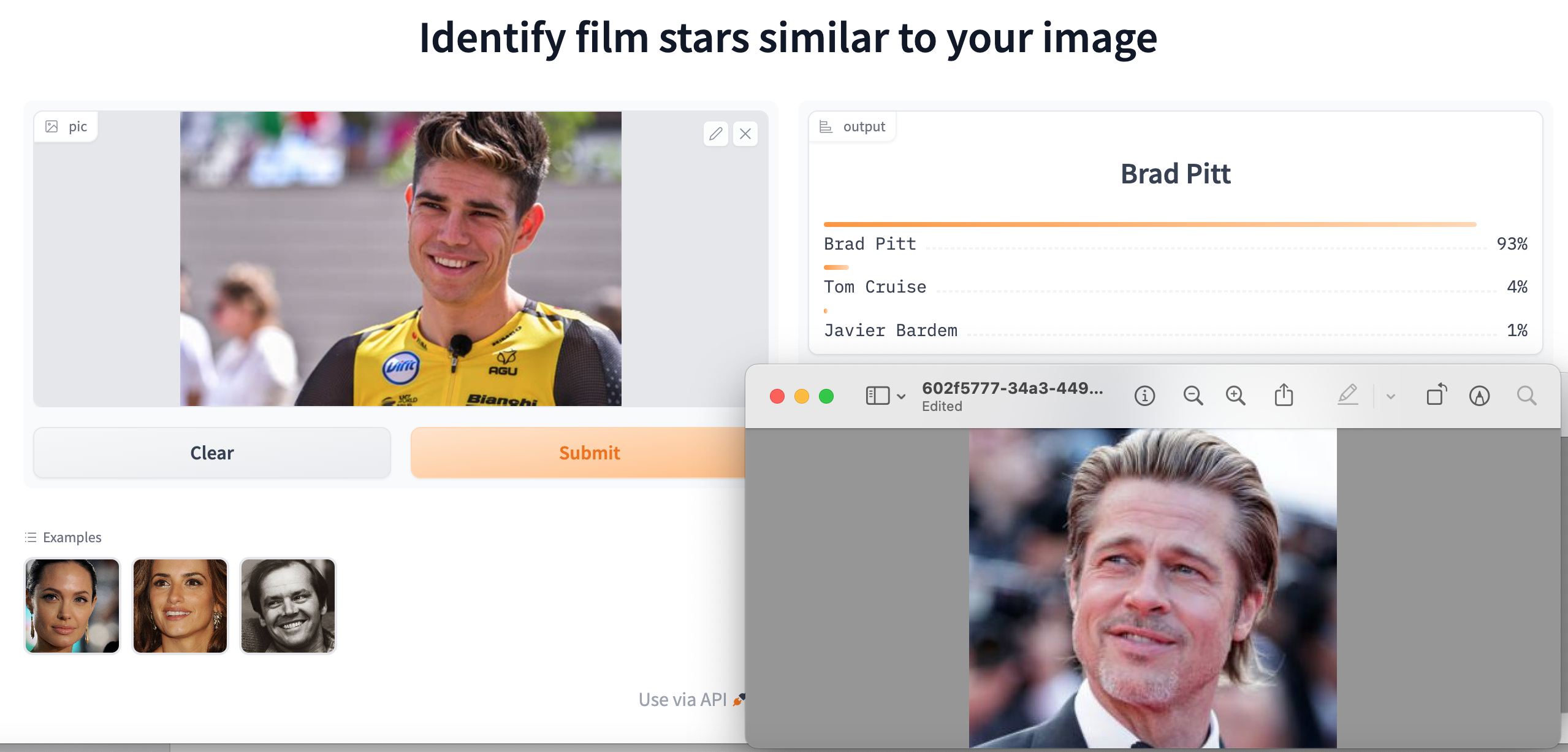

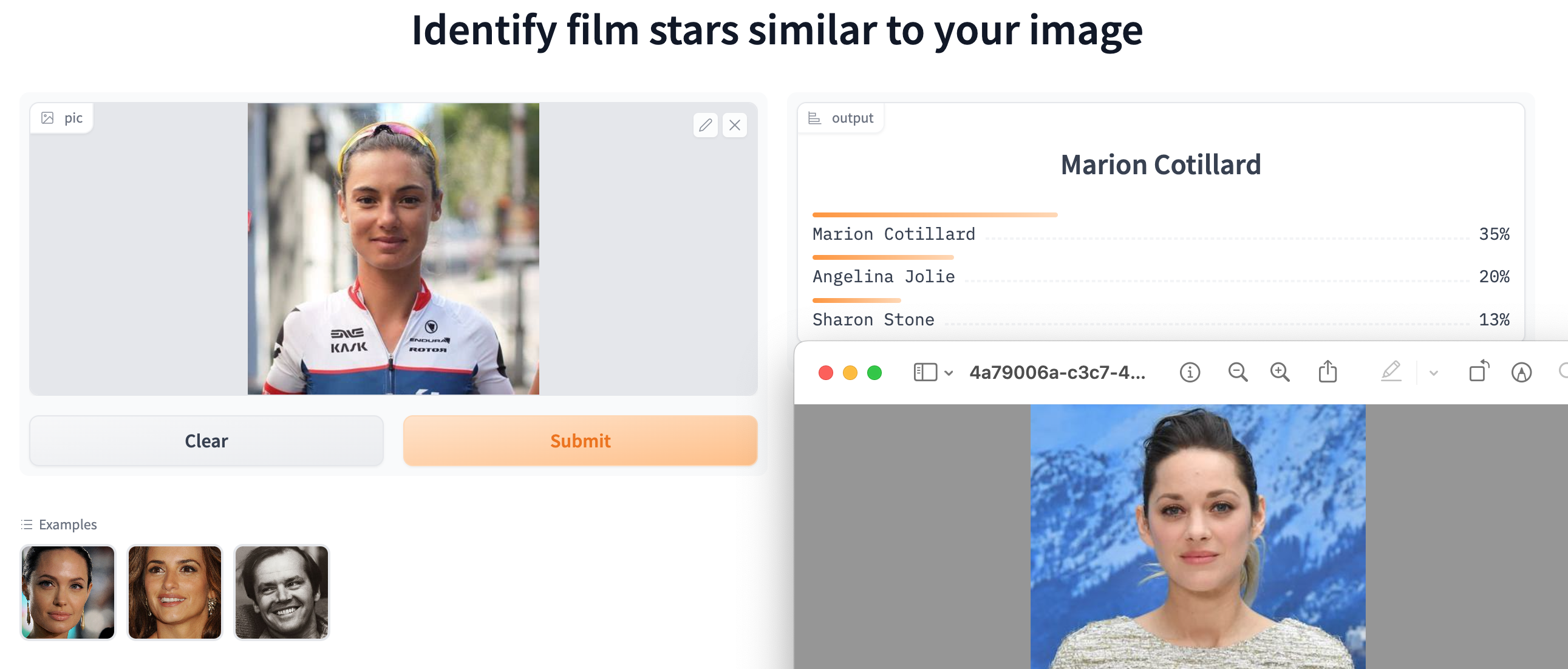

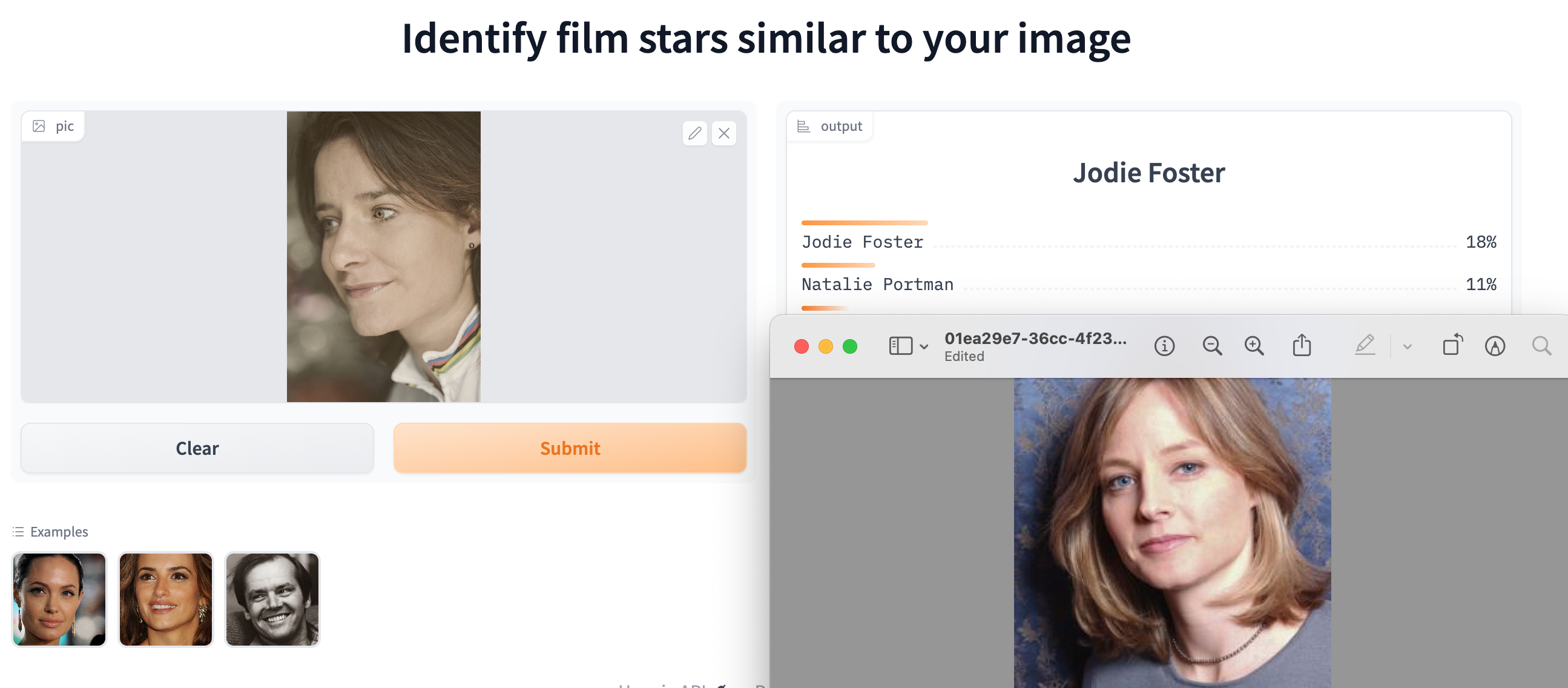

Finally, it was very useful to be able to define target images. If defined for the initial prompt, this saves loading an additional Stable Diffusion text-to-image pipeline to create the first frame. Otherwise, defining a target image for a particular prompt drags the animation towards the target, by mixing increasing proportions of the target with the current image, progressively from the previous prompt. This is also useful for the final frame of the animation. One way to create target images is to run a few prompts through Stable Diffusion here.
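The pull towards a target image can be sketched as a blend whose weight grows with each frame. The linear schedule below, which reaches the target exactly on the last frame, is an assumption, as are the function names. Floats again stand in for image arrays.

```python
from typing import List


def target_weights(n_frames: int) -> List[float]:
    """Target proportions rising linearly, reaching 1.0 on the final frame."""
    return [(i + 1) / n_frames for i in range(n_frames)]


def pull_towards_target(current, target, weight: float):
    """Mix `weight` of the target image into the current image."""
    return (1 - weight) * current + weight * target


# Four frames between the previous prompt and the prompt that has a target.
for w in target_weights(4):
    print(w, pull_towards_target(0.0, 8.0, w))
```

In an image-to-image feedback loop, the blended frame would presumably be passed on as the input for the next step, so the animation drifts towards the target rather than jumping to it.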

Although some lyrics explicitly mention objects that Stable Diffusion can illustrate, I found it helps to focus on specific key words. This is my template for “No more heroes” by The Stranglers. It produced an awesome video that I put on GitHub.

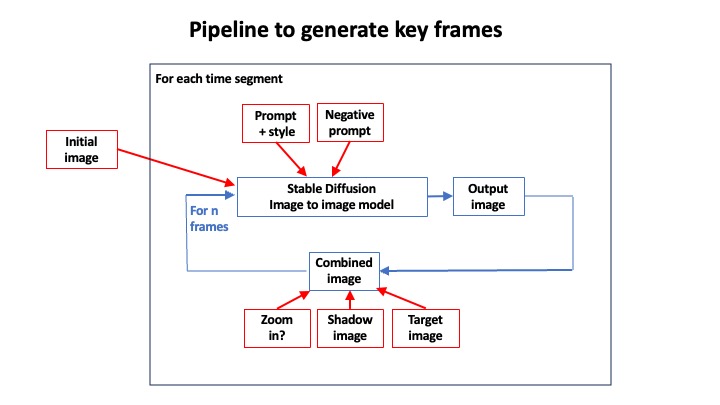

Once an Excel template is complete, the following pipeline generates the key frames by looping through each prompt and calculating how many frames are required to fill the time until the next prompt at the desired number of seconds per frame. A basic GPU takes about 3 seconds per key frame, so a song takes about 10-20 minutes, including inserting the smoothing steps between the key frames.
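The frame-count calculation can be illustrated with a short sketch. The function name, the rounding rule and the minimum of one key frame per prompt are assumptions; the times are example values in seconds.

```python
from typing import List, Sequence


def key_frames_per_prompt(
    times: Sequence[float], track_end: float, seconds_per_frame: float
) -> List[int]:
    """Number of key frames needed to fill the gap after each prompt.

    times: start time of each prompt in seconds, in ascending order.
    track_end: time at which the last prompt stops.
    """
    boundaries = list(times[1:]) + [track_end]
    counts = []
    for start, end in zip(times, boundaries):
        n = round((end - start) / seconds_per_frame)
        counts.append(max(1, n))  # assumption: every prompt gets at least one key frame
    return counts


counts = key_frames_per_prompt([0, 10, 25], track_end=40, seconds_per_frame=2.5)
print(counts, sum(counts))
```

At roughly 3 seconds of GPU time per key frame, a track that needs 200 key frames would take about 10 minutes to generate, before the smoothing steps are added.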

Sample files and a Jupyter notebook are posted on my GitHub repository.

I’ve started a YouTube channel

Having previously published my music on SoundCloud, I am now able to generate my own videos. So I have set up a YouTube channel, where you can find a selection of my work. I never expected the fast.ai course to lead me here.

PyData London

I presented this concept at the PyData London – 76th meetup on 1 August 2023. These are my slides.